Introduction

This project presents an end-to-end object detection AI, including training and model inference with hardware acceleration by Neural Processing Units (NPUs).

For this project, the TensorFlow Lite library was used both to create an object detection model that recognizes Toradex SoMs and to run inference with this model on a Toradex Verdin SoM, detecting objects in the images captured by a camera.

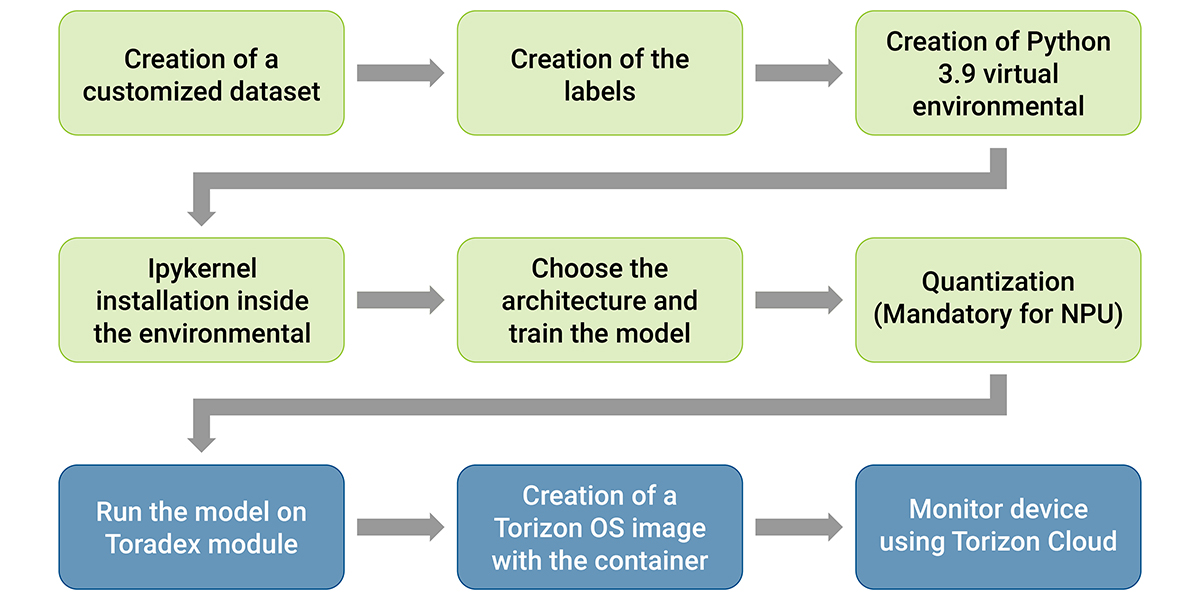

Based on this, the following steps were followed:

- Training a model using a custom dataset containing photos of Toradex System on Modules. This step is performed on the host computer.

- Running the inference on the Toradex SoM, where it can be processed either by the CPU or by the NPU. If the NPU is chosen, the application installs all the packages required to delegate this processing to the NPU.

When the model was processed by the NPU, the inference time decreased by a factor of about 12; in other words, using the NPU increases the FPS of the image processing pipeline about 12 times. This is expected, as the NPU is a processing unit dedicated to neural network applications.

Why choose a SoM with an NPU?

This application runs a large video pipeline, which requires a computational system capable of processing camera images and performing AI inference. This demands high processing power both from the CPU, which handles the camera and part of the AI processing, and from an NPU, if available, which processes a large portion of the inference and relieves the CPU's workload.

At the time this blog post was written, Toradex had three modules with an NPU: the Verdin iMX8M Plus, the Verdin iMX95, and the Aquila AM69. The tests were conducted on the iMX8M Plus because it has the lowest processing power of the three: if the project runs on the least powerful module, it can also be reproduced on the more powerful ones.

In addition, the Verdin iMX8M Plus has an integrated 2.3 TOPS (trillion operations per second) NPU that provides hardware acceleration for AI tasks, allowing inferences to be processed efficiently. It also features a quad-core Arm Cortex-A53 processor clocked at 1.8 GHz, combining high performance and energy efficiency to handle complex real-time tasks such as object detection using a camera.

Finally, this SoM (System on Module) is available with the fully integrated Torizon platform, which accelerates product development and maintenance with features such as highly secure and reliable remote updates, device monitoring, and remote access. Integration with tools like the Torizon extension for VS Code and TorizonCore Builder speeds up the development of applications like this one, simplifying deployment and the debugging of potential errors.

Why choose SSD MobileNet V2?

Normally, AI model training is done on a powerful machine, even using multiple GPUs for immense computational power, without limitations on power usage or time (training can take days). On the other hand, model inference is usually done on devices with limited power and computational resources, like mobile and embedded devices. Even with NPUs for inference, power consumption remains an issue, potentially causing overheating and performance loss.

Therefore, the most efficient approach for implementing these models on embedded devices is to focus extensive computational power on training the AI, while prioritizing performance during model inference. For this purpose, the "SSD MobileNet V2" architecture was the most suitable, offering the best performance among the studied options.

Ultimately, this approach yields a model that performs well enough for high-FPS inference on embedded devices.

Materials and Methods

Test setup information:

- System on Module: Verdin iMX8M Plus Quad 4GB Wi-Fi / Bluetooth IT V1.1A

- Carrier board: Yavia V1.1A

- Torizon OS version: 6.5.0

Below is a flowchart outlining the step-by-step process for executing this project. Blue rectangles represent steps performed on the host PC, while orange boxes represent steps related to the Toradex SoM.

Label your dataset

You can run this project out of the box; in that case, an example dataset containing Toradex modules will be downloaded during training. The project trains a model with the "ssd-mobilenet-v2" architecture and returns a TensorFlow Lite (.tflite) file. If you don't want to label a custom dataset and just want to try the example dataset, skip this section.

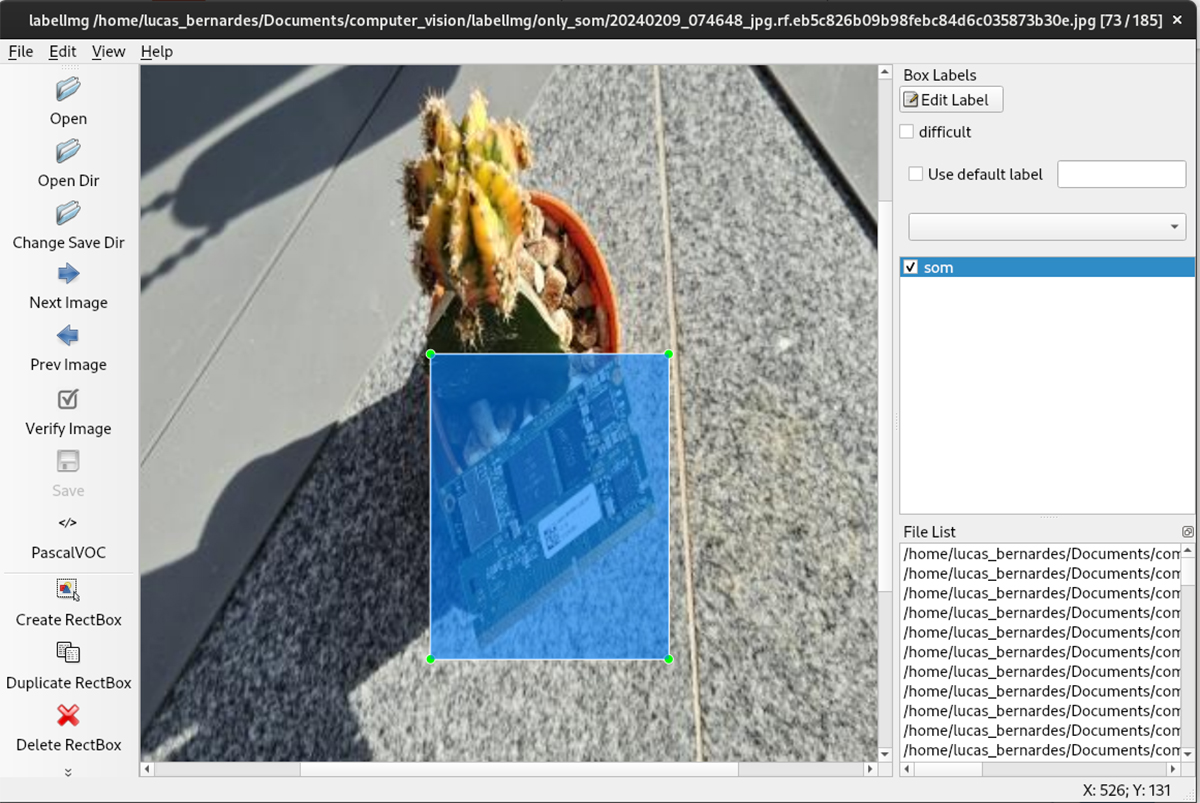

If you want to train with your own custom dataset, we recommend using labelImg to label your images. You can install it with:

pip install labelImg

Then run labelImg by typing the following in your shell:

labelImg

In labelImg, make sure to save the annotations in PascalVOC format. Below is an image of how labels are created.
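To see what a PascalVOC annotation actually holds, the minimal sketch below parses one with Python's standard xml.etree.ElementTree module and extracts each object's class name and the two opposite corners of its bounding box. The sample XML, its file name, and the "verdin" class are hypothetical; real labelImg files contain additional fields (folder, path, pose, and so on) that this sketch simply ignores.

```python
import xml.etree.ElementTree as ET


def parse_voc(xml_text):
    """Return (label, xmin, ymin, xmax, ymax) tuples from a PascalVOC annotation string."""
    root = ET.fromstring(xml_text)
    boxes = []
    for obj in root.iter("object"):
        name = obj.findtext("name")
        bndbox = obj.find("bndbox")
        # PascalVOC stores two opposite corners of the box, in pixel coordinates
        coords = [int(float(bndbox.findtext(tag))) for tag in ("xmin", "ymin", "xmax", "ymax")]
        boxes.append((name, *coords))
    return boxes


# Hypothetical annotation for a single object
sample = """<annotation>
  <filename>som_photo.jpg</filename>
  <size><width>640</width><height>480</height><depth>3</depth></size>
  <object>
    <name>verdin</name>
    <bndbox><xmin>120</xmin><ymin>80</ymin><xmax>300</xmax><ymax>210</ymax></bndbox>
  </object>
</annotation>"""

print(parse_voc(sample))  # [('verdin', 120, 80, 300, 210)]
```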

Label your dataset

The annotations must be in the same folder as the images, like this:

your_workspace$ tree only_som_dataset/
only_som_dataset/
├── 2016-01-20-15-42-56_jpg.rf.74b304f8df03664962178894f8b0c748.jpg
├── 2016-01-20-15-42-56_jpg.rf.74b304f8df03664962178894f8b0c748.xml
├── 2016-01-20-15-43-01_jpg.rf.7d54031dca45539e1c06dd3e3a5f9989.jpg
├── 2016-01-20-15-43-01_jpg.rf.7d54031dca45539e1c06dd3e3a5f9989.xml
├── 2016-04-14-11-03-24_jpg.rf.e1cd8cdeb105b39ee8b66de8534e339e.jpg
├── 2016-04-14-11-03-24_jpg.rf.e1cd8cdeb105b39ee8b66de8534e339e.xml
...

1 directory, 370 files
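Since every image needs an annotation file with the same base name in the same folder, a quick consistency check before training can save a failed run. The sketch below is a hypothetical helper, not part of the training repository: it assumes a flat folder and treats .jpg, .jpeg, and .png as image extensions.

```python
from pathlib import Path

# Assumed image extensions; adjust to match your dataset
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def find_unpaired(dataset_dir):
    """Return (images without an .xml file, .xml files without an image) in a flat folder."""
    folder = Path(dataset_dir)
    # Path.stem drops only the last suffix, so "x_jpg.rf.abc.jpg" and "x_jpg.rf.abc.xml" match
    images = {p.stem for p in folder.iterdir() if p.suffix.lower() in IMAGE_SUFFIXES}
    labels = {p.stem for p in folder.iterdir() if p.suffix.lower() == ".xml"}
    return sorted(images - labels), sorted(labels - images)
```

For the example above, find_unpaired("only_som_dataset") should return two empty lists, since the 370 files form 185 image/annotation pairs.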

You will need to enter your labels in alphabetical order when loading the dataset.
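If you are not sure which class names your annotations use, the sketch below collects every object name from the PascalVOC files in a folder and sorts it alphabetically. It is a hypothetical helper, not taken from the training repository. Note that Python's sorted() is case-sensitive (uppercase letters sort before lowercase), so keep label capitalization consistent.

```python
import xml.etree.ElementTree as ET
from pathlib import Path


def collect_labels(dataset_dir):
    """Return every class name used in the PascalVOC .xml files, sorted alphabetically."""
    names = set()
    for xml_file in Path(dataset_dir).glob("*.xml"):
        root = ET.parse(str(xml_file)).getroot()
        for obj in root.iter("object"):
            names.add(obj.findtext("name").strip())
    return sorted(names)
```

For example, collect_labels("only_som_dataset") lists the classes in the order expected when loading the dataset.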

Create and Set a Virtual Environment

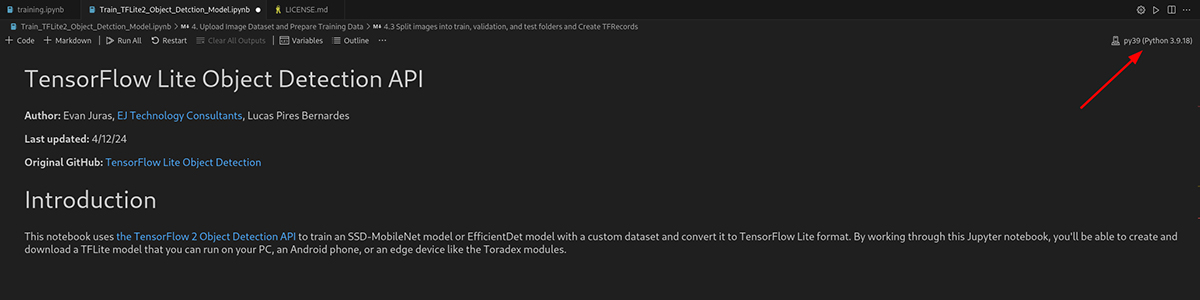

The training script only works with Python 3.9 or earlier, so we recommend using Conda, the easiest way to create a virtual environment with a different Python version.

Please follow Installing Conda to install Conda, then follow Managing Environments to create a virtual environment with Python 3.9.

After activating your virtual environment with Python 3.9, install the ipykernel package within the virtual environment to run the Jupyter Notebook.

pip install ipykernel
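Once the notebook is attached to the new environment, a one-cell check like the sketch below can confirm that the kernel really runs a supported interpreter before training starts. The 3.9 ceiling comes from the requirement stated above; the function name is hypothetical.

```python
import sys

# Per this article, the training script requires Python 3.9 or earlier
MAX_SUPPORTED = (3, 9)


def python_is_supported(version_info=sys.version_info):
    """True when the interpreter's (major, minor) version is at most MAX_SUPPORTED."""
    return tuple(version_info[:2]) <= MAX_SUPPORTED


print(sys.version.split()[0], "supported:", python_is_supported())
```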

Now, you can run the training script. First, clone the training repository to your computer:

git clone git@github.com:lucasbernardestoradex/TrainingTFLiteToradex.git

Inside VS Code, remember to switch to the virtual environment you created with Conda by selecting it as the notebook's Python environment.

Run the Training Script

Now you can run the Jupyter Notebook. It generates a .tflite file, which you can test at the end of the notebook and which will later be used to run the model on Toradex modules.

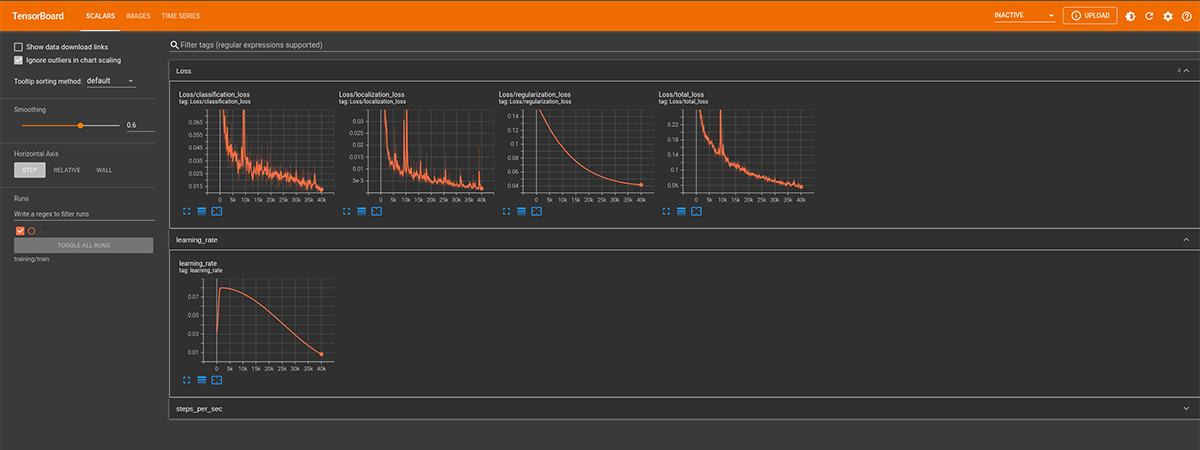

Below is an example of the training logs in TensorBoard for the "ssd-mobilenet-v2-fpnlite-320" architecture, trained with the training repository code on a dataset of Toradex modules.

Run the Model on Toradex Modules

To run the model on Toradex modules, refer to the Torizon Sample: Real Time Object Detection with Tensorflow Lite page and follow its inference instructions. By default, this sample uses a standard quantized model; to use your custom dataset, replace the .tflite and labelmap.txt files with the ones created in this project. Note that the model must be quantized to run inference on the NPU of modules that have one.
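When you swap in your own labelmap.txt, the class indices returned by the model must line up with its lines. The sketch below shows that mapping plus a confidence threshold. It assumes one class name per line and the usual TFLite SSD post-processing outputs (boxes as normalized [ymin, xmin, ymax, xmax], float class indices, one score per detection). How the Torizon sample itself reads these outputs is not shown here, and some label maps start with a background placeholder line that shifts every index by one.

```python
def load_labels(labelmap_text):
    """Assumed labelmap.txt layout: one class name per line, blank lines ignored."""
    return [line.strip() for line in labelmap_text.splitlines() if line.strip()]


def filter_detections(boxes, classes, scores, labels, threshold=0.5):
    """Keep detections scoring at least `threshold` and attach their class names.

    Assumes boxes are [ymin, xmin, ymax, xmax] normalized to 0..1 and
    class indices are floats, as in the common TFLite SSD output layout.
    """
    kept = []
    for box, cls, score in zip(boxes, classes, scores):
        if score >= threshold:
            kept.append((labels[int(cls)], float(score), list(box)))
    return kept


labels = load_labels("apalis\ncolibri\nverdin\n")  # hypothetical class names
detections = filter_detections(
    boxes=[[0.10, 0.20, 0.55, 0.60], [0.30, 0.30, 0.40, 0.40]],
    classes=[2.0, 0.0],
    scores=[0.91, 0.12],
    labels=labels,
)
print(detections)  # [('verdin', 0.91, [0.1, 0.2, 0.55, 0.6])]
```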

We recommend keeping the following in mind:

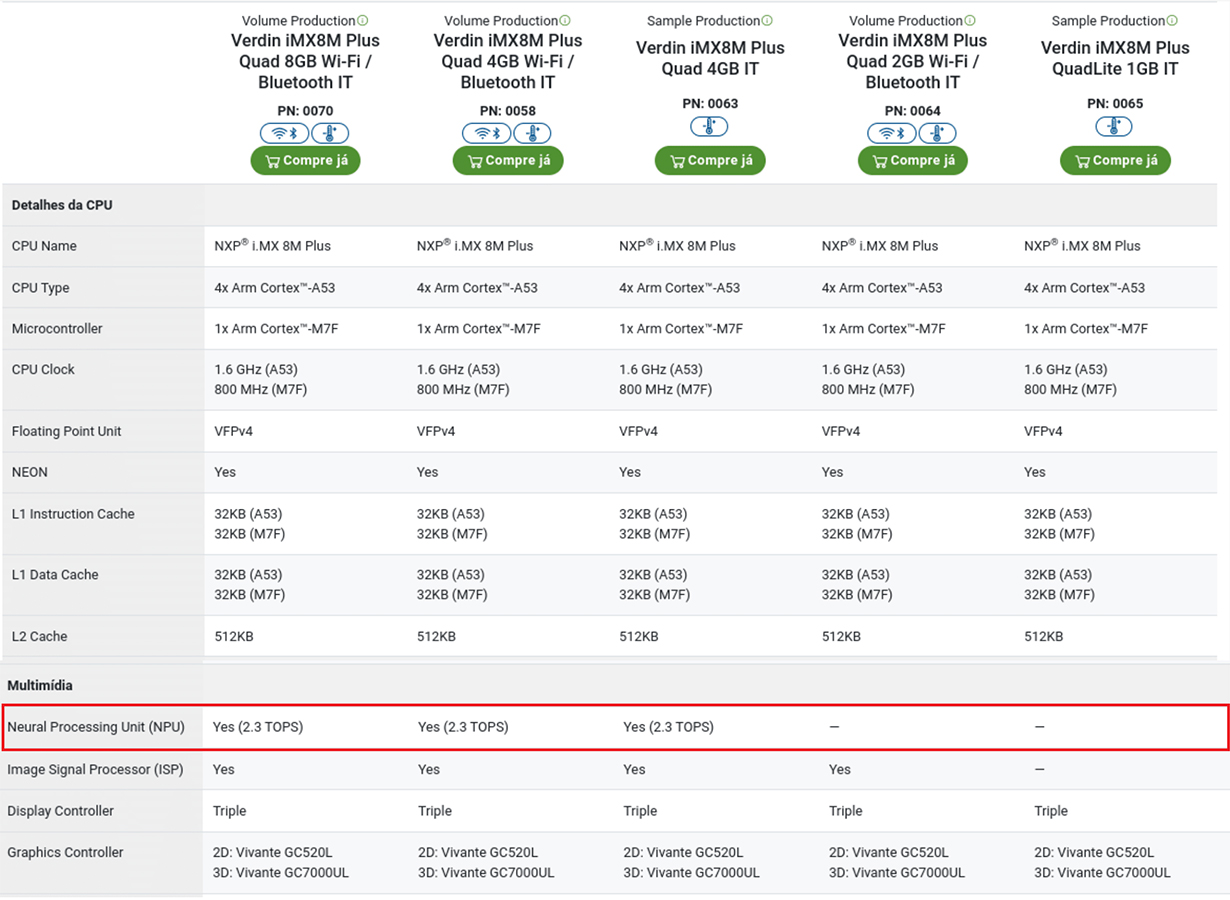

- Before attempting to run on the NPU, make sure that your module has one. You can check this by selecting your module family on the Toradex Computer on Modules webpage and reviewing its features.

- For example, the image below shows the features of the Verdin iMX8M Plus modules, where only three of the variants have an NPU.

- Be sure to select the correct camera ID.

Thermal Conditions

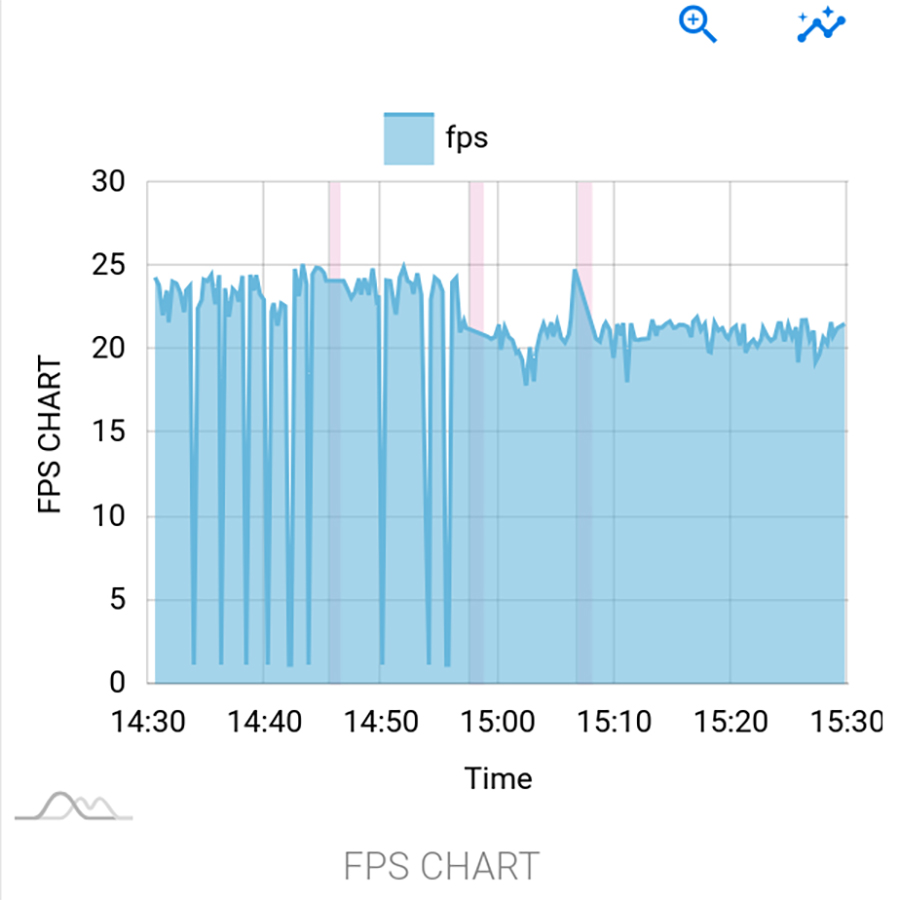

During model execution on the Toradex modules, thermal throttling was observed due to high power consumption. The FPS graph shows sudden FPS drops that are characteristic of this problem, which can be addressed in two ways:

Heat Sink Installation: This involves attaching a heat sink to the processor to increase its surface area for heat dissipation. This improves heat transfer from the processor to the surrounding environment, reducing throttling caused by excessive heat.

Changing the CPU power profile: The CPU governor can be changed from ondemand to powersave (refer to CPU Governor). This lowers the CPU clock to its minimum frequency, reducing power consumption and potentially resolving the throttling issue. This approach was tested in an environment with a controlled temperature of 24 ºC and, as seen in the FPS graph (obtained from torizon.io), the FPS drops stopped halfway through the measured period, coinciding with the moment this solution was applied.
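On Linux, the governor of each core is exposed in sysfs as /sys/devices/system/cpu/cpuN/cpufreq/scaling_governor. The sketch below writes a governor name to every such file; the cpu_root parameter exists only so the function can be exercised against a fake directory tree. Writing to the real sysfs files requires root privileges, and the change does not persist across reboots.

```python
from pathlib import Path


def set_governor(governor="powersave", cpu_root="/sys/devices/system/cpu"):
    """Write `governor` to every cpuN/cpufreq/scaling_governor file; return the paths changed."""
    changed = []
    # "cpu[0-9]*" matches cpu0, cpu1, ... but not sibling entries such as "cpufreq"
    for gov_file in sorted(Path(cpu_root).glob("cpu[0-9]*/cpufreq/scaling_governor")):
        gov_file.write_text(governor + "\n")
        changed.append(str(gov_file))
    return changed
```

Run as root on the SoM, set_governor("powersave") switches all cores; set_governor("ondemand") restores the previous profile.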

Comparison Between Different Hardware Delegations

From the inference results, it was possible to measure the performance of lightweight inference on the CPU, GPU, and NPU. Additionally, running htop in the Toradex SoM terminal provided the CPU usage, load average (5 minutes), and memory usage (MB). Finally, latency was calculated as the time between a movement and the appearance of that movement on the screen: the movement was recorded in slow motion, and the elapsed time was counted frame by frame. The results are presented in the Conclusions section.
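The frame-by-frame latency measurement reduces to simple arithmetic: the number of slow-motion frames between the movement and its appearance on screen, divided by the recording frame rate. The 240 fps recording rate used below is a hypothetical value, since the camera settings are not stated here; at that rate, each frame corresponds to about 4.2 ms, which bounds the resolution of the measurement.

```python
def latency_ms(frame_count, recording_fps):
    """Latency in milliseconds from the number of slow-motion frames elapsed
    between a movement and its appearance on screen."""
    return frame_count * 1000.0 / recording_fps


# Hypothetical: 90 frames counted in a 240 fps slow-motion recording
print(latency_ms(90, 240))  # 375.0
```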

Bundling this Application Into a Torizon OS image

First, refer to Installing TorizonCore Builder website to install TorizonCore Builder on the host PC. After this, refer to Using TorizonCore Builder to create your TorizonCore Builder repository.

Next, you need to add the production docker-compose.yml file for inference to your TorizonCore Builder configuration, so that the custom Torizon OS image includes your container. For this, you can use the docker-compose.yml file from the TFLite RTSP repository project downloaded in the "Run the Model on Toradex Modules" section.

Copy the docker-compose.yml file from the TFLite RTSP repository to your TorizonCore Builder repository. Remember that the USE_HW_ACCELERATED_INFERENCE environment variable determines whether inference is NPU accelerated.
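As an illustration of how such a flag can select the hardware, the sketch below turns the environment variable into a boolean and, when it is set, loads a TensorFlow Lite external delegate. This is not the TFLite RTSP repository's code: the accepted flag values and the delegate path /usr/lib/libvx_delegate.so (the VX delegate commonly shipped for the i.MX8M Plus NPU) are assumptions. Interpreter and load_delegate are the tflite_runtime APIs; they are imported inside the function so the flag parsing can be tested without tflite_runtime installed.

```python
import os

# Assumed location of the NPU (VX) delegate library on the i.MX8M Plus image
VX_DELEGATE = "/usr/lib/libvx_delegate.so"


def hw_acceleration_requested(environ=os.environ):
    """Interpret USE_HW_ACCELERATED_INFERENCE as a boolean (accepted values assumed)."""
    value = environ.get("USE_HW_ACCELERATED_INFERENCE", "0").strip().lower()
    return value in {"1", "true", "yes", "on"}


def make_interpreter(model_path, use_npu):
    """Create a TFLite interpreter, delegating to the NPU when requested."""
    # Lazy import: only needed on the device, where tflite_runtime is installed
    from tflite_runtime.interpreter import Interpreter, load_delegate

    delegates = [load_delegate(VX_DELEGATE)] if use_npu else []
    return Interpreter(model_path=model_path, experimental_delegates=delegates)
```

On the device, make_interpreter("model.tflite", hw_acceleration_requested()) would fall back to the CPU whenever the variable is unset or false.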

Finally, refer to Building The Custom Image of Torizon OS to create your custom image of Torizon OS and install it on your device.

How to Monitor Your Device Using Torizon Cloud

First, you’ll need to provision your device on Torizon Cloud: Device and Fleet Management.

In the object-detection.py file of the TFLite RTSP repository, you can add the following snippet, which saves the FPS measurement to a file in the /tmp directory:

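# Save the FPS count in /tmp so that Device Monitoring in Torizon Cloud can read it
# (t1 and t2 are assumed to be the timestamps taken around the processing of one frame)
fps = 1 / (t2 - t1)
file_path = "/tmp/fps.txt"
with open(file_path, 'w') as file:
    file.write(str(fps))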
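Because fluent-bit reads /tmp/fps.txt on its own schedule, it can occasionally catch the file right after open(..., 'w') has truncated it and before the new value is written, producing an empty reading. A common remedy is to write to a temporary file in the same directory and atomically rename it over the target. The helper below is a hypothetical variant of the snippet above, not part of the repository; it also rounds the value to two decimals, unlike str(fps).

```python
import os
import tempfile


def write_fps_atomically(fps, file_path="/tmp/fps.txt"):
    """Write the FPS value to a temporary file and atomically rename it over
    file_path, so a concurrent reader never sees an empty or partial file."""
    directory = os.path.dirname(file_path) or "."
    fd, tmp_path = tempfile.mkstemp(dir=directory, prefix=".fps-")
    try:
        with os.fdopen(fd, "w") as tmp:
            tmp.write(f"{fps:.2f}")
        os.chmod(tmp_path, 0o644)  # mkstemp creates 0600 files; keep the file world-readable
        os.replace(tmp_path, file_path)  # atomic rename on POSIX within one filesystem
    except BaseException:
        if os.path.exists(tmp_path):
            os.unlink(tmp_path)
        raise
```

In the inference loop, the call would be write_fps_atomically(1 / (t2 - t1)).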

Following the instructions in Customizing Device Metrics for Torizon Cloud, add the following sections above the [OUTPUT] section of the /etc/fluent-bit/fluent-bit.conf file on your Toradex SoM:

[INPUT]
    Name          exec
    Tag           fpscount
    Command       echo "{\"fps\":\"$(cat /tmp/fps.txt)\"}"
    Parser        json
    Interval_Sec  15

[FILTER]
    Name          nest
    Match         fpscount
    Operation     nest
    Wildcard      *
    Nest_under    custom
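To make the effect of these two sections concrete, the sketch below mimics, in plain Python, the record they produce: the exec input emits a JSON object whose "fps" value is a string (because of the quotes around $(cat /tmp/fps.txt)), and the nest filter with Wildcard * and Nest_under custom moves every key of the record under a "custom" map. This is only an illustration of the data shape, not fluent-bit itself; the function names are hypothetical.

```python
import json


def exec_output(fps_text):
    """Mimic the exec input's Command: a JSON object with the FPS as a string."""
    return json.dumps({"fps": fps_text})


def nest_under_custom(record, key="custom"):
    """Mimic the nest filter with Wildcard * and Nest_under custom."""
    return {key: dict(record)}


record = json.loads(exec_output("28.57"))  # what the json parser yields
print(nest_under_custom(record))  # {'custom': {'fps': '28.57'}}
```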

Then, run the objectDetectionTorizon container to create the /tmp/fps.txt file and restart the fluent-bit service:

sudo systemctl restart fluent-bit

Remember to keep the TFLite RTSP container running so that /tmp/fps.txt is created and the FPS counter stays updated.

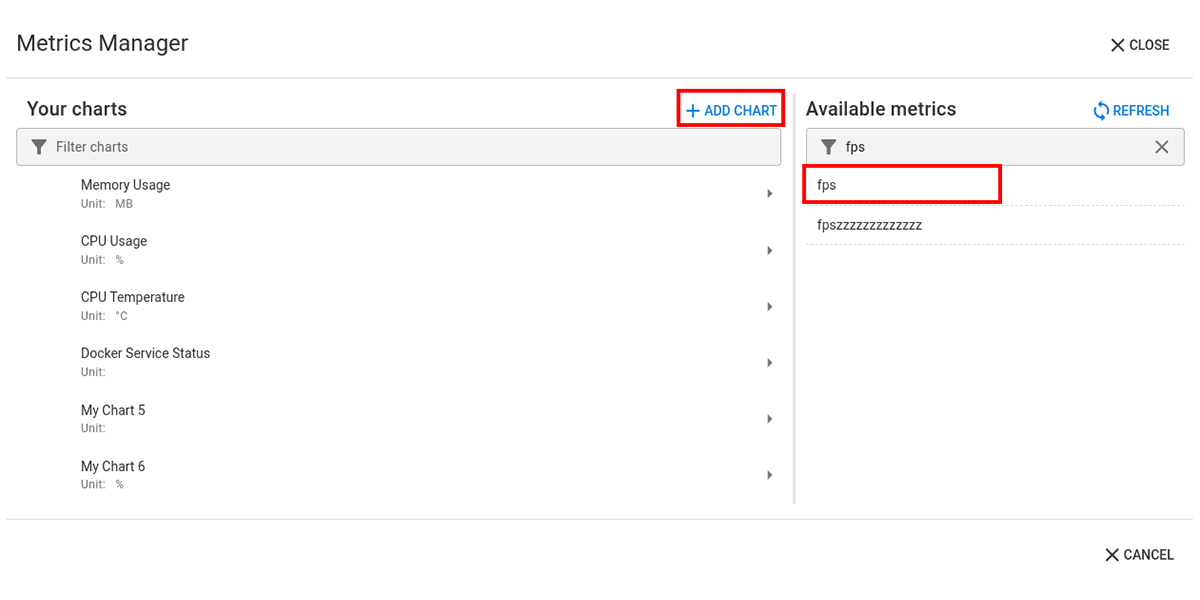

To add the metric to the platform, click "Devices" in the left sidebar, select your device, and click "View Details." In the top bar, go to "Device metrics," then "Customize Metrics," which opens the screen below. Your custom metric should appear under "Available metrics," highlighted in red. To start monitoring it, create a chart (also highlighted in red) and select your metric.

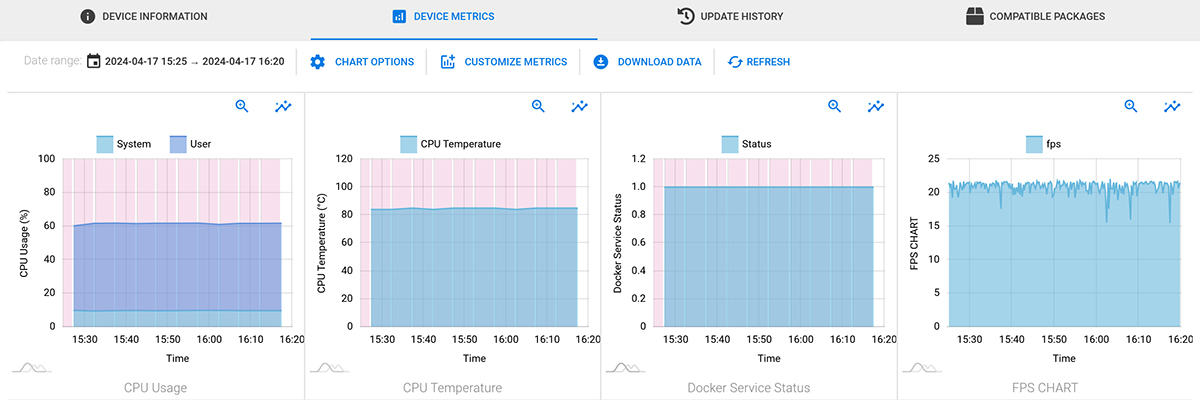

Below is an example of metric monitoring, including the custom FPS metric.

Conclusions

The table below compares inference performance on the CPU, GPU, and NPU using the "ssd-mobilenet-v2-fpnlite-320" architecture. The "EfficientDet-Lite" architecture, by comparison, delivered approximately four times lower performance.

| Hardware inference | FPS | CPU usage (%) | Load average (5 min) | Memory usage (MB) | Latency (ms) |
|---|---|---|---|---|---|
| GPU | 1.13 | 5.45 | 0.52 | 659 | 5925 |
| CPU | 2.34 | 28 | 0.85 | 583 | 2850 |
| NPU | 28.57 | 34.8 | 1.45 | 643 | 375 |

The NPU delivers approximately 12 times the FPS of the CPU, as it is specialized hardware for this type of data processing. However, CPU load also increased. On the other hand, quantization did not improve CPU or GPU performance with TensorFlow Lite 2, making it unnecessary when inference is intended only for those processors.
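The speedup figures follow directly from the table. The short sketch below recomputes them from the measured FPS and latency values; note that end-to-end latency improves by a smaller factor (about 7.6x) than FPS (about 12.2x).

```python
# FPS and latency (ms) values from the comparison table
results = {
    "GPU": {"fps": 1.13, "latency_ms": 5925},
    "CPU": {"fps": 2.34, "latency_ms": 2850},
    "NPU": {"fps": 28.57, "latency_ms": 375},
}


def speedup(numerator, denominator, metric="fps"):
    """Ratio of one device's metric to another's."""
    return results[numerator][metric] / results[denominator][metric]


print(f"NPU vs CPU FPS: {speedup('NPU', 'CPU'):.1f}x")  # NPU vs CPU FPS: 12.2x
print(f"CPU vs NPU latency: {speedup('CPU', 'NPU', 'latency_ms'):.1f}x")  # CPU vs NPU latency: 7.6x
```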

Below are three videos that demonstrate the inferences on the GPU, CPU, and NPU, respectively, where the performance gain on the NPU can be clearly observed. Very high accuracy can also be observed in SoM identification.

Note that it was possible to recognize the Toradex module with a dataset of only 185 images, both in controlled environments (white background, for example) and in uncontrolled environments (real backgrounds, such as an office).

Furthermore, for a professional application, it is advisable to enlarge the training dataset and diversify it, for example by adding photos of the target object in different positions and against different backgrounds. Since this project is a demonstration, creating such a large dataset was impractical.

A possible follow-up study is the performance difference between TensorFlow Lite versions 1 and 2 when using quantization. A reference for such a study is TensorFlow Lite v1 Object Detection API in Colab; keep in mind that this project uses TensorFlow Lite version 2. Other architectures, such as YOLO and Faster R-CNN, could also be tested to evaluate performance differences.

References

Training TFLite Toradex: Object detection AI training repository using TensorFlow Lite version 2. Enables the creation of quantized models with the "ssd-mobilenet-v2" and "EfficientDet-Lite" architectures for NPU execution.

TFLite RTSP: Repository for running inference with the trained model on Toradex hardware using video captured from a USB camera. The model can run on both the CPU and the NPU, and the project provides scripts for both.

VOC Dataset: Example training dataset containing a directory with part of a VOC dataset plus our own photos of Toradex modules, and a zipped folder containing only the Toradex modules. All images in this repository have their labels annotated in Pascal VOC format (.xml).

Appendix I - Concepts

This section explains important concepts in the field of Artificial Intelligence to clear up any doubts the reader may have about the topics addressed throughout the text.

Dataset: A dataset is a collection of data that will be used to train Artificial Intelligence, allowing it to learn patterns, make predictions, and perform intelligent tasks. In this case, it is a set of real photos that will be used for AI learning, so that later it can identify these objects on its own.

Labels: Labels are additional information associated with the data in a dataset; they tell the AI what the data represents so that it can learn patterns. In image datasets, they identify the position of an object in an image, usually as two coordinates that are opposite vertices of the rectangle containing the object. They are necessary for training an AI model, because they tell it where the objects in the dataset are. Examples of label formats include PascalVOC and Create ML.

AI training: Also known as machine learning, it is the process of teaching an artificial intelligence (AI) model to perform a specific task. Through training, the model learns to identify patterns and relationships in a dataset, allowing it to make predictions or autonomous decisions.

Architectures: AI training architectures define the structure and design principles used in building artificial intelligence (AI) systems. Each approach has its own strengths and weaknesses, and the choice of the ideal architecture depends on the specific task, the available data, and the computational resources. Examples of AI architectures for object detection are SSD MobileNet V2 and EfficientDet Lite.

Inference: Also known as computational reasoning, it is the process in which a trained artificial intelligence (AI) model uses its knowledge to generate results on new, unseen data. The model takes what it learned during training (by analyzing a vast number of examples) and applies that knowledge to solve real-world problems. In this case, the model takes what it learned from the labeled dataset and uses it to perform object detection on a camera video.

Quantization: A technique used to reduce the size and computational complexity of AI models, particularly neural networks. It involves reducing the precision of the numerical representations used within the model, typically from 32-bit floating-point numbers to 8-bit integers. This reduction in precision can lead to a significant decrease in the model's size, making it more efficient for deployment on devices with limited computational resources, such as mobile phones and embedded systems. This quantization is particularly useful when deploying a model on an NPU, as this hardware only performs operations on 8-bit integers.
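In TensorFlow Lite's int8 scheme, each tensor has a scale and a zero point, and a real value is represented as real_value = (int8_value - zero_point) × scale. The minimal sketch below implements that affine mapping in both directions for a single value. The scale and zero point are hypothetical (chosen for values spanning roughly -1.0 to 1.0); real converters compute them per tensor or per channel from calibration data. Python's round() rounds exact halves to the nearest even integer, which may differ from a given converter's rounding.

```python
def quantize(x, scale, zero_point, qmin=-128, qmax=127):
    """Affine int8 quantization: round(x / scale) + zero_point, clamped to the int8 range."""
    q = round(x / scale) + zero_point
    return max(qmin, min(qmax, q))


def dequantize(q, scale, zero_point):
    """Recover an approximate real value: (q - zero_point) * scale."""
    return (q - zero_point) * scale


# Hypothetical parameters for a tensor whose values span roughly [-1.0, 1.0]
scale, zero_point = 2.0 / 255, 0
for value in (-1.0, 0.0, 0.5, 1.0):
    q = quantize(value, scale, zero_point)
    print(value, "->", q, "->", round(dequantize(q, scale, zero_point), 4))
```

Each dequantized value differs from the original by at most half a scale step (about 0.004 here), which is the precision traded for the 4x smaller 8-bit representation.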

NPU: While the CPU can process all types of data, the NPU is optimized for matrix operations, executing many of them in parallel in real time. The NPU is specially designed for IoT AI, to accelerate neural network operations and overcome the inefficiency of traditional chips at these operations. The NPU includes modules for multiplication and addition, activation functions, 2D data operations, decompression, etc. The multiplication and addition module is used to calculate matrix multiplication and addition, convolution, dot product, and other functions.
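The multiply-and-add operation at the heart of these modules can be illustrated with an int8 dot product. The sketch below is a conceptual model of a quantized multiply-accumulate, not a description of how the i.MX8M Plus NPU is built: a single product of two int8 values can reach 16384 in magnitude, far outside the int8 range, so quantized kernels typically accumulate into a 32-bit register. Python integers never overflow, so the sketch checks the int32 range explicitly.

```python
def int8_dot(a, b):
    """Multiply-accumulate two int8 vectors into a wide (int32-range) accumulator."""
    if any(not -128 <= v <= 127 for v in list(a) + list(b)):
        raise ValueError("inputs must be int8 values")
    acc = 0
    for x, y in zip(a, b):
        acc += x * y  # one multiply-add step
    if not -2**31 <= acc < 2**31:
        raise OverflowError("result exceeds the int32 accumulator range")
    return acc


print(int8_dot([127, -128, 3], [2, 1, -4]))  # 114
```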

The NPUs improve energy efficiency and performance, making them essential for battery-powered applications. It is designed for 8-bit integer operations, which makes quantization mandatory.